This might be beating a dead horse, but QuickTime truly sucks.

For those using 16-bit (deep color) applications: always use the Force 16-bit encoding option. It gives the highest quality and, surprisingly, often the lowest data rate.

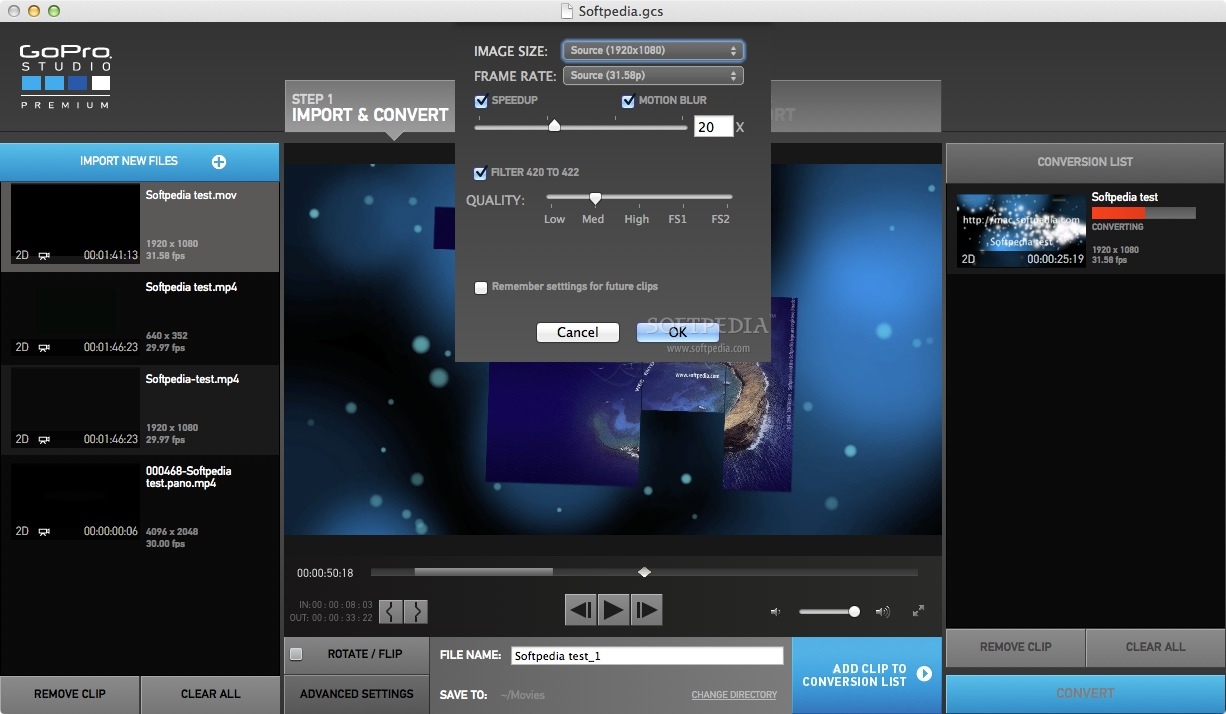

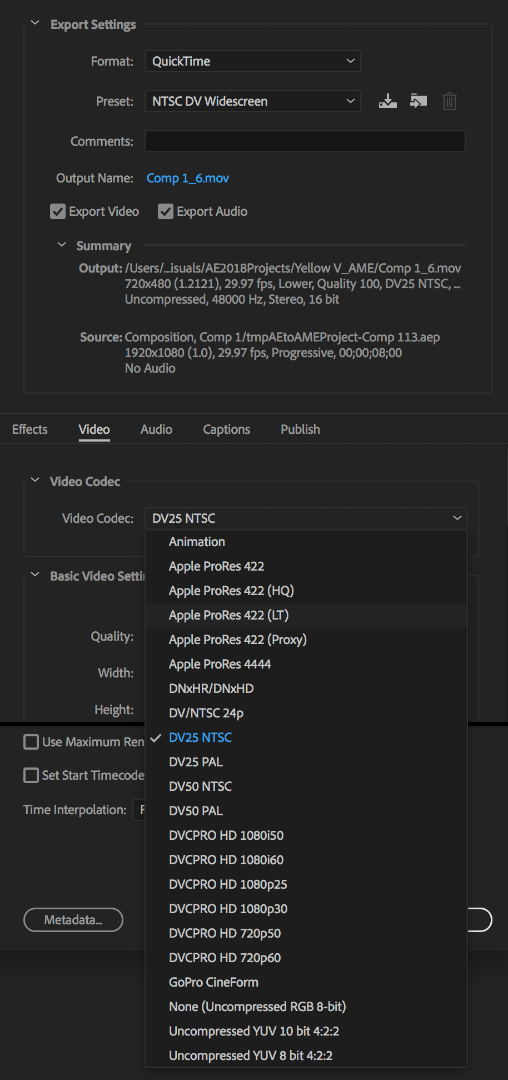

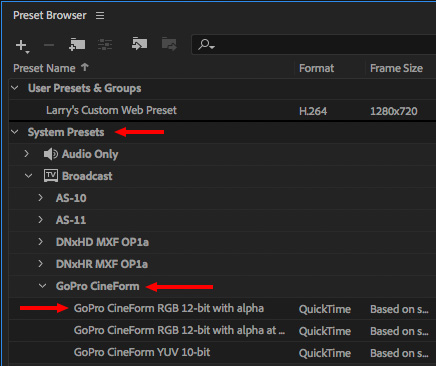

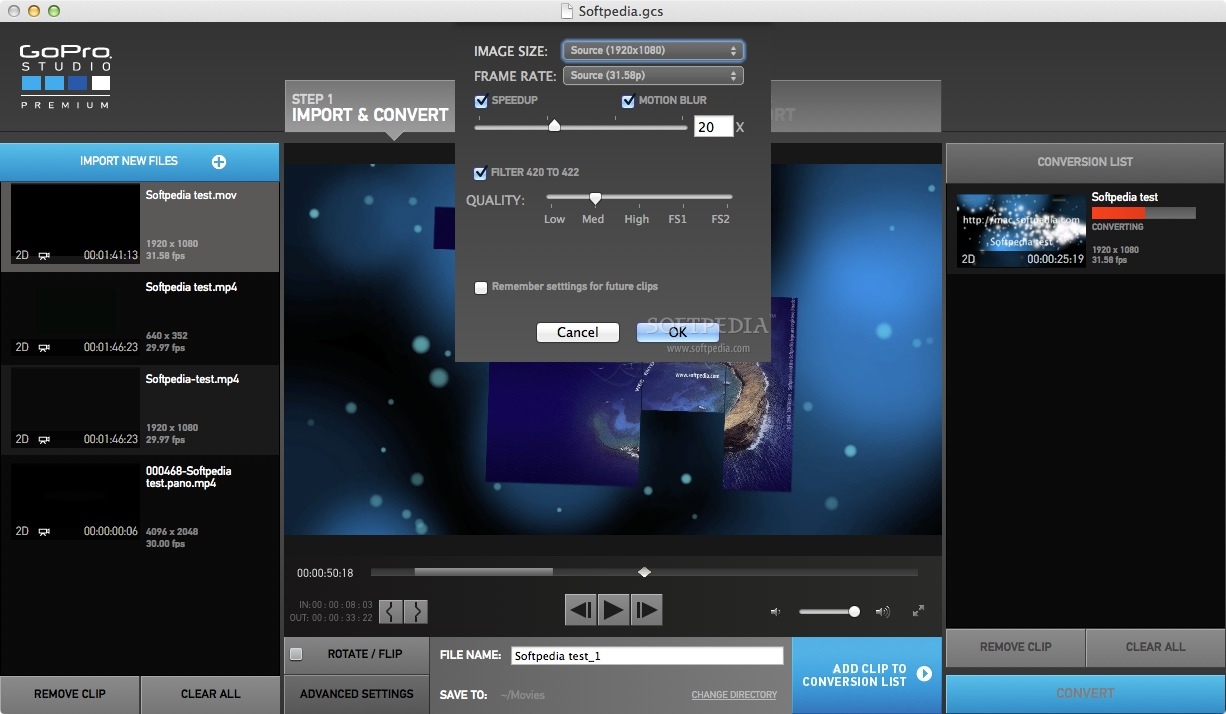

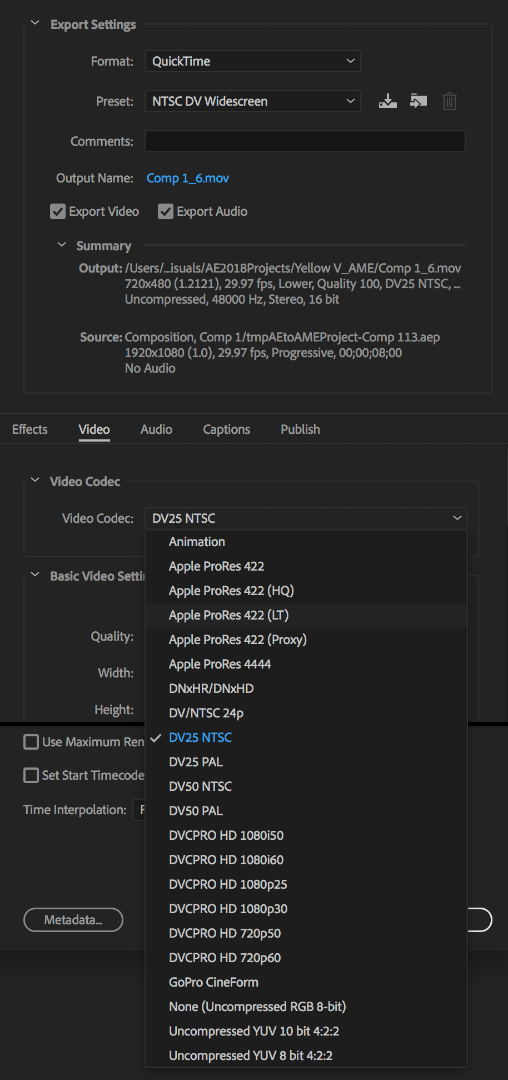

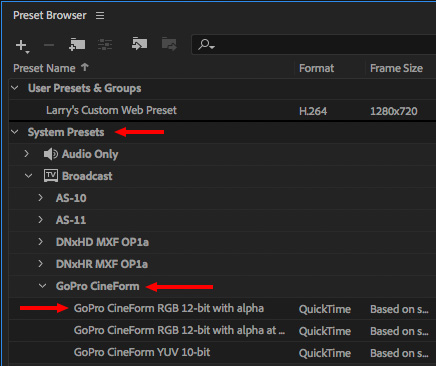


QuickTime loves 8-bit. It greatly prefers it, and support for deep color is difficult at best. Over the years we tried to make 16-bit the preferred mode for our codec within QuickTime, yet many video tools broke when we did this. The compromise was to add Force 16-bit to the QuickTime compression options, allowing the user to control the codec's pixel type preference: applications that can handle 16-bit benefit, and applications that can't still work.

I used After Effects as my test environment (but the same applies to other QuickTime-enabled deep color applications). I created a smooth 16-bit gradient image, then encoded it three ways: at 8-bit using an 8-bit composite, at 16-bit using a 16-bit composite, and at 16-bit using a 16-bit composite with Force mode enabled (pictured above).

Without post color correction, all three encodes looked pretty much the same*, yet the data rates are very different.

* Note: QuickTime screws up the gamma for the middle option, so with the image gamma corrected to compensate, they looked the same.

The resulting data rates for 1080p 4:4:4 CineForm encodes at Filmscan quality:

16-bit – 28.4Mbytes/s

Our instinct that a higher bit rate means higher quality will lead us astray in this case.

Under color correction you can see the difference, so I went extreme using this curve:

The results are beautiful, really a great demo for wavelets.

Zooming in, the results are still great. Nothing was lost with the smallest of the output files.

Of course we know 8-bit will be bad

We are also seeing the subtle wavelet compression ringing at the 8-bit contours, enhanced by this extreme color correction. This is normal, yet it shows you something about the CineForm codec: it always uses deep color precision. 8-bit looks better using more than 8 bits to store it. That ringing mostly disappears using an 8-bit composite; an 8-bit DCT compressor could not do as well.

Storing 8-bit values in a 12-bit encoder, steps of 1,1,1,1,2,2,2,2 (in 8-bit, gradients are clipped, producing these flat spots) are encoded as 16,16,16,16,32,32,32,32. The larger step does take more bits to encode, all with the aim of delivering higher quality. Most compression likes continuous tones and gradients; edges are harder. Here the 8-bit encode breaks the smooth gradient into contours, which have edges. The clean 16-bit forced encode above is all gradients and no edges, resulting in a smaller, smooth, beautiful image.
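To make the 1,1,1,1,2,2,2,2 example concrete, here is a minimal Python sketch. It only illustrates the arithmetic and is not CineForm code: the helper names (`promote_8_to_12`, `adjacent_steps`) are made up for this post, and the promotion is assumed to be a plain multiply by 16 (shift left 4 bits), which matches the numbers above.

```python
def promote_8_to_12(samples):
    """Scale 8-bit code values to 12-bit by shifting left 4 bits (x16).

    Assumption: a plain shift, which matches the 1 -> 16, 2 -> 32 example.
    """
    return [s << 4 for s in samples]


def adjacent_steps(samples):
    """Differences between neighbouring samples (the 'edges' the codec sees)."""
    return [b - a for a, b in zip(samples, samples[1:])]


# The contoured 8-bit gradient from the text, promoted to 12-bit.
contoured_8bit = [1, 1, 1, 1, 2, 2, 2, 2]
contoured_12bit = promote_8_to_12(contoured_8bit)

# A true deep-color gradient covering the same 12-bit range (16..32).
smooth_12bit = [16 + round(i * 16 / 7) for i in range(8)]

print(contoured_12bit)                  # flat spots, then one large jump
print(adjacent_steps(contoured_12bit))  # a single step of 16
print(adjacent_steps(smooth_12bit))     # many small steps of 2 or 3
```

The contoured signal has one hard step of 16 surrounded by flat spots, while the real 12-bit ramp only ever moves by 2 or 3 per sample. That single large step is the edge that costs bits.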

Now for the QuickTime craziness: 16-bit without forcing 16-bit.

The image is dithered. This is the “magic” of QuickTime. I didn’t ask for dithering, I didn’t want dithering. Dithering is why the file is so big when compressed. QuickTime is given a 16-bit format to pass to a codec that can do 16-bit, but it sees the codec can also do 8-bit, so it dithers to 8-bit, screws up the gamma, then gives that to the encoder. Now nearly every pixel has an edge, and therefore there is a lot more information to encode. CineForm still successfully encodes dithered images with good results, yet this is not what you expect. If you wanted noise, you could add it as needed; you don't want your video interface (QuickTime) to add noise for you.
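A rough way to see why dithering inflates the data rate is to count how often neighbouring pixels differ. The sketch below is only an illustration: I don't know what dither QuickTime actually applies, so it assumes simple uniform random dither, and all function names are hypothetical.

```python
import random


def ramp_16bit(count, lo, hi):
    """A smooth 16-bit ramp from lo to hi over `count` samples."""
    return [lo + (hi - lo) * i // (count - 1) for i in range(count)]


def truncate_to_8bit(samples):
    """Drop the low 8 bits: smooth ramps turn into flat contours."""
    return [s >> 8 for s in samples]


def dither_to_8bit(samples, rng):
    """Add up to one 8-bit step of uniform noise, then truncate.

    Assumption: plain random dither, not QuickTime's real algorithm.
    """
    return [min(255, (s + rng.randrange(256)) >> 8) for s in samples]


def count_transitions(samples):
    """Number of places where a sample differs from its neighbour."""
    return sum(1 for a, b in zip(samples, samples[1:]) if a != b)


rng = random.Random(0)                   # fixed seed for repeatability
ramp = ramp_16bit(4096, 0x4000, 0x5000)  # spans 8-bit codes 0x40..0x50
truncated = truncate_to_8bit(ramp)
dithered = dither_to_8bit(ramp, rng)

print("transitions, truncated:", count_transitions(truncated))
print("transitions, dithered: ", count_transitions(dithered))
```

The truncated ramp changes value only at the 16 contour boundaries, while the dithered ramp flips between neighbouring codes all along its length. Each of those flips is a tiny edge the encoder has to spend bits on.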

If anyone can explain why QuickTime does this, I would love to not have users manually select “Force 16-bit encoding”.

P.S. Real-world deep 10/12-bit sources pretty much always produce smaller files than 8-bit. This was an extreme example to show why this is happening.
